
cyberbarf VOLUME 24 No 7 EXAMINE THE NET WAY OF LIFE FEBRUARY, 2026

Digital Illustration "Aquarius Journey" ©2026 Ski

cyberbarf FEBRUARY, 2026

AI PREDICTIONS FOR 2026
HOW TO TRICK THE ALGORITHM
iTOONS
SOUTH KOREA'S NEW AI LAW
QUICK BYTES
FOUND BUT NOT LOST ON THE INTERNET
WHETHER REPORT

©2026 Ski Words, Cartoons & Illustrations All Rights Reserved Worldwide Distributed by pindermedia.com, inc

cyberculture, commentary, cartoons, essays


cyberbarf AI PREDICTIONS FOR 2026 CRYSTAL BALL

AI slop continues to manifest itself in our daily lives. There is a surge of AI slop video channels. Social media feeds are filled with wild animal and baby interactions, unbelievable accident videos and the worst AI from Trump's own White House staff. Yes, the president has gone all-in on fake AI news releases. AI-written articles and AI-created fake videos are growing at a rapid pace.

One of the most immediate effects of AI on information is volume. There is more content than ever, covering every possible topic. This trend will accelerate in 2026. While this accessibility can be beneficial, it also creates new challenges. Not all information is created with the same intent, depth, or accountability. AI-generated content is often designed to sound neutral and authoritative. It can summarize complex topics quickly, but it may lack nuance or context. Even when it explains ideas clearly, it can still include subtle inaccuracies. Unlike human authors, AI systems do not evaluate truth or relevance. They predict language based on patterns.

For readers, this means that consuming information now requires greater awareness. Understanding the source of a text helps determine how much trust to place in it and whether further verification is needed. You have to become your own copy editor and fact checker. In 2026, the value of human perspective becomes more important, not less. Experience, judgment, and responsibility are qualities AI cannot replicate. The future of information will depend on how well these human elements are preserved within an AI-influenced environment.

The biggest change in 2026 will be recognition. Readers are beginning to recognize the difference. They are paying more attention to credibility, sourcing, and transparency. Information is no longer judged solely by how well it is written, but by who stands behind it. As AI becomes more integrated into content creation, verification tools play a growing role.
AI detection tools analyze writing patterns to estimate whether content was generated by a machine. While they do not determine accuracy, they provide valuable context about authorship. The more the average person uses AI-detector software, the more they will learn to question and verify what they read, see or hear.

For example, did you read about the new movie PARASITE 2: UNDERCURRENTS (2026)? There was a slick movie poster with four original characters back in the slums of Seoul. But it was totally fake and without context, because one of the characters was killed in the original movie. Its director is currently making the most expensive animated movie in South Korean history, with another horror movie project being green lit. This fake movie is not listed on any actor wiki. In just a few minutes, one could verify that this post was nonsense. Whether it was created by a bot or a human trickster, we may never know. AI can only repeat and guess at what its output should be because it has no human context, experience or applied knowledge to get it right.

In the 2026 legal world, clients are looking to cut costs by pushing for cheaper AI research and integration. The transition from AI assistants to AI agents that execute multi-step factual tasks autonomously represents the year's most significant technical shift, according to Jones Walker. But there is a significant risk. The enthusiasm comes with persistent technical limitations. Stanford research found error rates of 17 percent for Lexis+ AI and 34 percent for Westlaw AI-Assisted Research, both legal-specific tools from established vendors. General-purpose models performed far worse. Over 700 court cases worldwide now involve AI hallucinations, with sanctions ranging from warnings to five-figure monetary penalties. For legal departments building AI governance frameworks, this means mandatory human review becomes more than just an ethical obligation. It's now a major liability.
Also in the legal sector, 2026 may be the watershed for AI copyright litigation. Late last year, a federal judge ruled that LLM developers cannot rely on the fair use defense when they illegally copied (pirated) the materials used to train their AI generators. The finding that the initial acts of piracy, scraping copyrighted works for a commercial enterprise, were illegal was a major win for the authors' class action lawsuit. Reportedly, the case quickly settled for $1.5 billion. But the music publishers have filed a new action seeking more than $3 billion in damages. One thing that has not been reported but could destroy the AI business model: under US copyright law, if infringement is found, the courts can order the infringing materials destroyed, i.e., the erasure of all the contaminated databases, leaving the AI companies with no generative outputs.

cyberbarf HOW TO TRICK THE ALGORITHM TECHNOLOGY

It was a slow and unassuming vanishing act. My prior favorite Korean golf videos no longer showed up on the YouTube homepage. Next to go were all the YouTube-specific golf channel brands. What replaced them were more car videos and reviews. Because it was a seamless algorithmic change-over of my viewing habits, it was hard to see that I was being led into another content field like a dairy farmer moving his cows to a new pasture. But it was something that I did not ask or search for . . . just clicking on one of these new videos begat more of them. The data mining of my searches for new car reviews also had to have been integrated into the content thumbnails.

Technology can now break your viewing habits in an undercover manner. In order to keep your favorites on the radar screen, you have to constantly re-train the updated algorithm to your preferences rather than let it decide for you. That means an occasional search for your favorite channel or podcast. That means watching your normal content on a more regular basis. It means you have to be the active program director of your own content.
In the TikTok and social media world, the advice is similar. Engaging with content before and after posting seems to help. Also, interacting with videos in your niche, using relevant hashtags, and posting consistently can help the algorithm learn what your account is about. It takes a bit of testing, but it usually pays off over time.

As Reason Magazine reasoned, “Each individual act of internet self-determination aggregates into something larger; distributed resistance to the corporate enclosure of digital space. Think of it like a ladder. Each rung represents a different level of engagement with how you consume, curate, and create your corner of the Internet. You don't have to climb every rung, but knowing they exist changes what's possible. And every step you take moves you away from the grip of corporate algorithms and toward an Internet experience you can put to use for your own interests and needs.”

You have to view the AI algorithms that curate content for your homepage feed as a very smart, very stubborn digital pet. A YouTube algorithm that serves up nature videos after you watch one winter storm clip is partially fixable. The three-dot menu hiding next to every video thumbnail contains your best weapon against algorithmic chaos: the Not Interested button. But Mozilla's real-world investigation found these controls prevented only 11 percent of unwanted recommendations. The frustrating truth remains: 39 percent of users who actively tried controlling recommendations reported zero improvement, Yahoo News reported.

Who would have thought that the technology battle to control your eyeballs would include you, a human being, on the front lines hacking away at the digital horde?

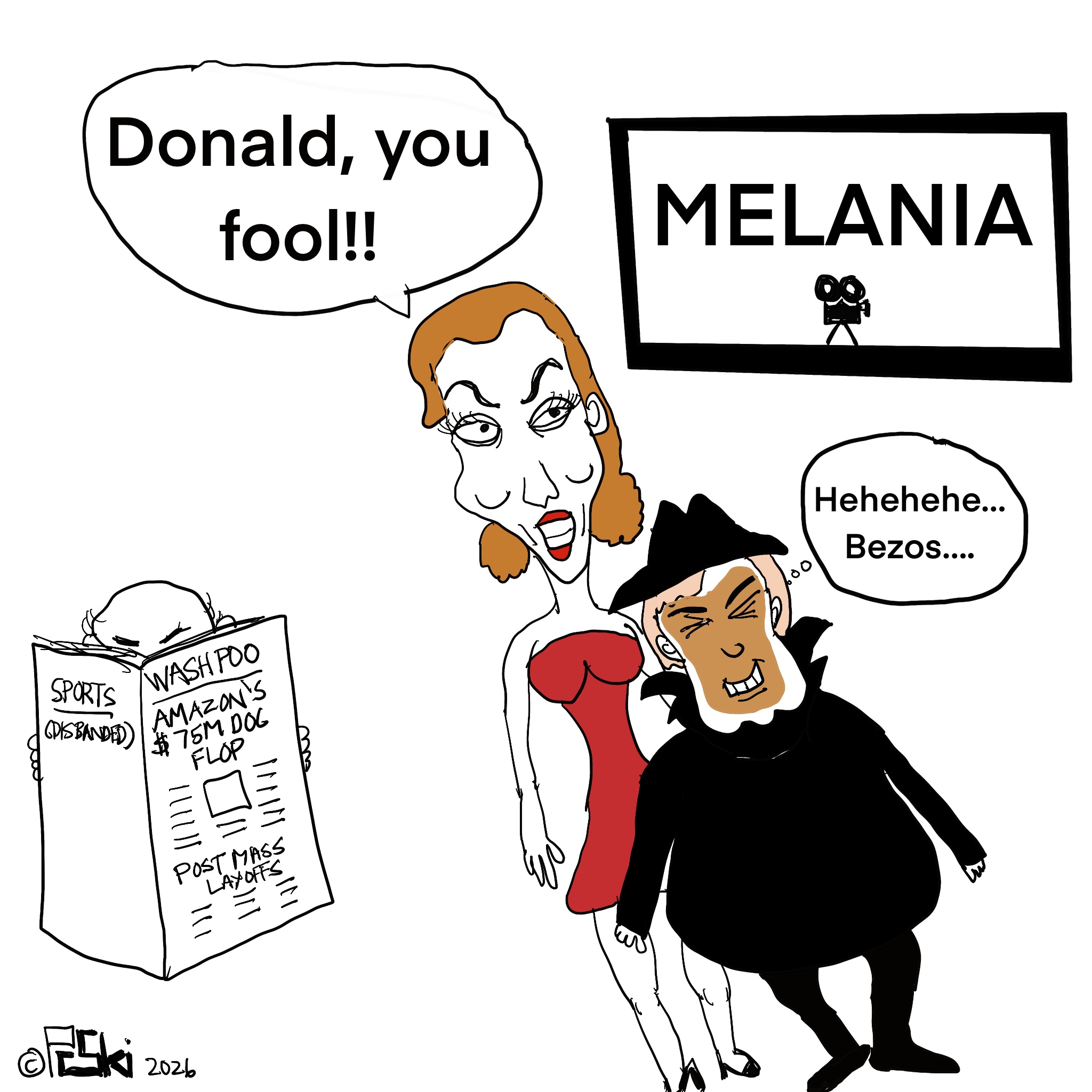

iToons

cyberbarf SOUTH KOREA'S NEW AI LAW NEWS

Neal Mohan, YouTube Chief Executive Officer (CEO), identified the “war against artificial intelligence (AI) slop” as the top priority for this year. As criticism mounts over the worsening viewing experience on YouTube due to a surge in low-quality AI-generated content, referred to as AI slop, the platform is taking action. He stated, “YouTube is a central hub of culture, and as we enter 2026, we are witnessing a new era of innovation where the boundaries between creativity and technology are blurring. At this inflection point, bold challenges are necessary.” Mohan emphasized YouTube's commitment to combating AI slop this year. “It is becoming increasingly difficult to distinguish between AI-generated content and reality, a phenomenon particularly evident in deepfakes.”

Concerns about AI slop are also growing. As AI floods the platform with what is often called “digital pollution,” worries are rising over declining platform trust, deteriorating content quality, and ecosystem disruption. Notably, South Korea is the country with the highest consumption of AI slop worldwide. Kapwing's analysis of the top 100 YouTube channels by country last November found that Korean-based AI slop channels accounted for 8.45 billion cumulative views, the highest among surveyed countries. This significantly surpasses Pakistan (5.3 billion views) and the U.S. (3.4 billion views).

South Korea just passed a new comprehensive AI law. Under South Korea's law, companies must ensure there is human oversight in so-called high-impact AI, which includes fields like nuclear safety, the production of drinking water, transport, healthcare and financial uses such as credit evaluation and loan screening. Other rules stipulate that companies must give users advance notice about products or services using high-impact or generative AI, and provide clear labeling when AI-generated output is difficult to distinguish from reality.
The Ministry of Science and ICT has said the legal framework was designed to promote AI adoption while building a foundation of safety and trust. However, the act's provisions and enforcement may take years to actually be implemented by the government.

As the debate over the creative and ethical value of using AI to generate video rages on, users are getting interesting results out of the machine, and artist-led AI content is gaining respect in some areas. Top film schools now offer courses on the use and ethics of AI in film production, and the world's best-known brands are utilizing AI in their creative processes, albeit with mixed results. Sadly, others are gaming the novelty of AI's prompt-and-click content, using these engines to churn out vast quantities of AI slop. Wiktionary defines a slopper as “Someone who is overreliant on generative AI tools such as ChatGPT; a producer of AI slop.” Along with the proliferation of brain rot videos online, sloppers are making it tough for principled and talented creators to get their videos seen.

The website kapwing.com did a study of AI video content. It found Spain's trending AI slop channels have a combined 20.22 million subscribers, the most of any country. In South Korea, the trending AI slop channels have amassed 8.45 billion views. The AI slop channel with the most views is India's Bandar Apna Dost (2.07 billion views). The channel has estimated annual earnings of $4,251,500. If there is a lazy way to make money, AI-generative programs are the means to that end.

It was reported that 79 percent of viewers cannot distinguish AI slop from actual human-produced content. This plays right into the hands of the slop makers. More platforms and web sites are demanding that AI content be labeled as such. The music industry is being overwhelmed by AI “artists” who are drowning out indie artists and major label acts. The avalanche of AI songs exceeds 100,000 daily.
How can anyone create a following or be followed when the music platform playlist creators are being spoofed by non-artists? If we have food labeling laws, why not content creation label laws?

cyberbarf QUICK BYTES CYBERCULTURE

NEW TIKTOK OWNERS NIGHTMARES. Reason Magazine reported that TikTok creators' worst nightmares seemed to be coming true. Just a few days after the company announced a deal to spin off US operations from TikTok's Chinese-affiliated parent company, ByteDance, some people posting political content started seeing zero views on their posts. And this attention deficit was especially stark on videos about Immigration and Customs Enforcement (ICE) actions in Minneapolis, some creators alleged. For many TikTok creators, it was confirmation that the new investors were intent on doing the Trump administration's bidding like Russian state-controlled media. But the new platform owner responded by saying low viewership counts were caused by technical difficulties following a power outage at a US data center. People have a right to be suspicious of government influence over private media companies.

ALCATRAZ HOMESTEAD. The New York Post reported on an adventurous coyote that has been living on Alcatraz Island since paddling more than a mile across the San Francisco Bay. Naturalists found the coyote much fatter than before thanks to the former prison island becoming an all-you-can-eat bird buffet. The coyote is thriving on the 22-acre island, but it is unclear why he swam across to a deserted island. However, park officials are concerned about a wild animal living in an area frequented by tourists. Officials are discussing plans to remove the coyote from the island.

COLLEGE DAYS. Everyone who went to college probably had a cheap food joint near campus that was a student institution. It is probably a universal college experience.
In South Korea, Korea University is launching a fundraiser for a planned 500 million won ($350,000) scholarship to honor Lee Young-chul, who sold burgers for just 1,000 won (70 cents) outside the campus for more than two decades. Lee died late last year. According to the university, the scholarship aims to provide living expense support for students facing financial hardship, much like Lee did through his burgers. For decades, Lee generously filled buns layered with stir-fried pork, cabbage and sauce for just 1,000 won; it was as much a source of comfort as it was a meal for students on tight budgets. He was so familiar and woven into campus life that students would take graduation photos with him at his store. There was no mention that he was an alumnus; he was just a small store owner whose kindness was a lesson learned outside a classroom.

SHOOT UP KIDS. Is social media a drug? Last month, the first high-profile lawsuit that could set meaningful precedent for the hundreds of other complaints went to trial. That lawsuit documents the case of a 19-year-old woman who hopes the jury will agree that Meta and YouTube caused psychological harm by designing features like infinite scroll and autoplay to push her down a path that she alleges triggered depression, anxiety, self-harm, and suicidality. TikTok and Snapchat were also targeted by the lawsuit, but both have settled, Bloomberg reported. Plaintiff attorneys seek deep-pocket targets to attempt to fix social ills. But is there some level of self-accountability missing here?

NO BISON LOVE. The Bureau of Land Management does not like bison herds. An ambitious and privately funded project to create a 5,000-square-mile prairie reserve where buffalo may roam and antelope play in eastern Montana is being stymied by an obtuse new ruling by the Bureau of Land Management (BLM).
The goal of the American Prairie nonprofit is to re-create a prairie ecosystem one-and-a-half times the size of Yellowstone National Park that can eventually support a free-ranging herd of 5,000 bison. Since bison, like cows, are ruminants, it should not matter whether one or the other species is grazing on the landscape. In fact, this was the conclusion that the BLM reached back in 2022 when it approved American Prairie's proposal. But as Reason Magazine reported, the BLM's decision to treat grazing bison like grazing cows was opposed by the Montana Stockgrowers Association, with the organization arguing that bison are not livestock under the relevant federal regulations. The new Secretary of the Interior, Doug Burgum, ordered in December 2025 that the BLM reconsider its 2022 decision that bison may graze on BLM allotments. The new BLM decision issued last month now finds that bison are not cows, sheep, horses, burros, or goats and therefore are not domestic livestock according to federal regulations. Mogul Ted Turner, when he was alive, owned the most land in Montana. He used a good portion to re-introduce bison on his land as a potential new domestic meat source. I guess he was way ahead of his time. And bison meat is a healthy choice.

cyberbarf FOUND BUT NOT LOST ON THE INTERNET

Some cross-marketing ideas should never see the light of day. Crocs and Lego have teamed up to make Lego block shoes? How bulky and weird are these things? We doubt there is a market for these shoes except in orthopedic offices treating ankle injuries. (Source: neatorama)

In another section of reality, there is another thing we really do not need. It is a cat hauler, of sorts: a butt pack to carry around your kitten. It makes no sense. Someone has to push the cat into the pack, then zip it up. It hangs off your body in an awkward position. When you get to your destination, you need someone else (a stranger?) to unzip your cargo, which has had a thrilling jaunt bouncing off your buttocks. Impractical. Dumb. Useless. Why not use a normal animal carrier? Or a backpack? Things pet owners dream up . . . (Source: facebook)

YOUR AGE IS JUST A NUMBER.

cyberbarf THE WHETHER REPORT

cyberbarf STATUS

Question: Whether the Minnesota ICE shootings will stop the Trump administration's behavior toward immigration enforcement?

* Educated Guess * Possible * Probable * Beyond a Reasonable Doubt * Doubtful * Vapor Dream

Question: Whether the Minnesota ICE shootings, without strong Republican backlash, will give Democrats a midterm election landslide?

* Educated Guess * Possible * Probable * Beyond a Reasonable Doubt * Doubtful * Vapor Dream


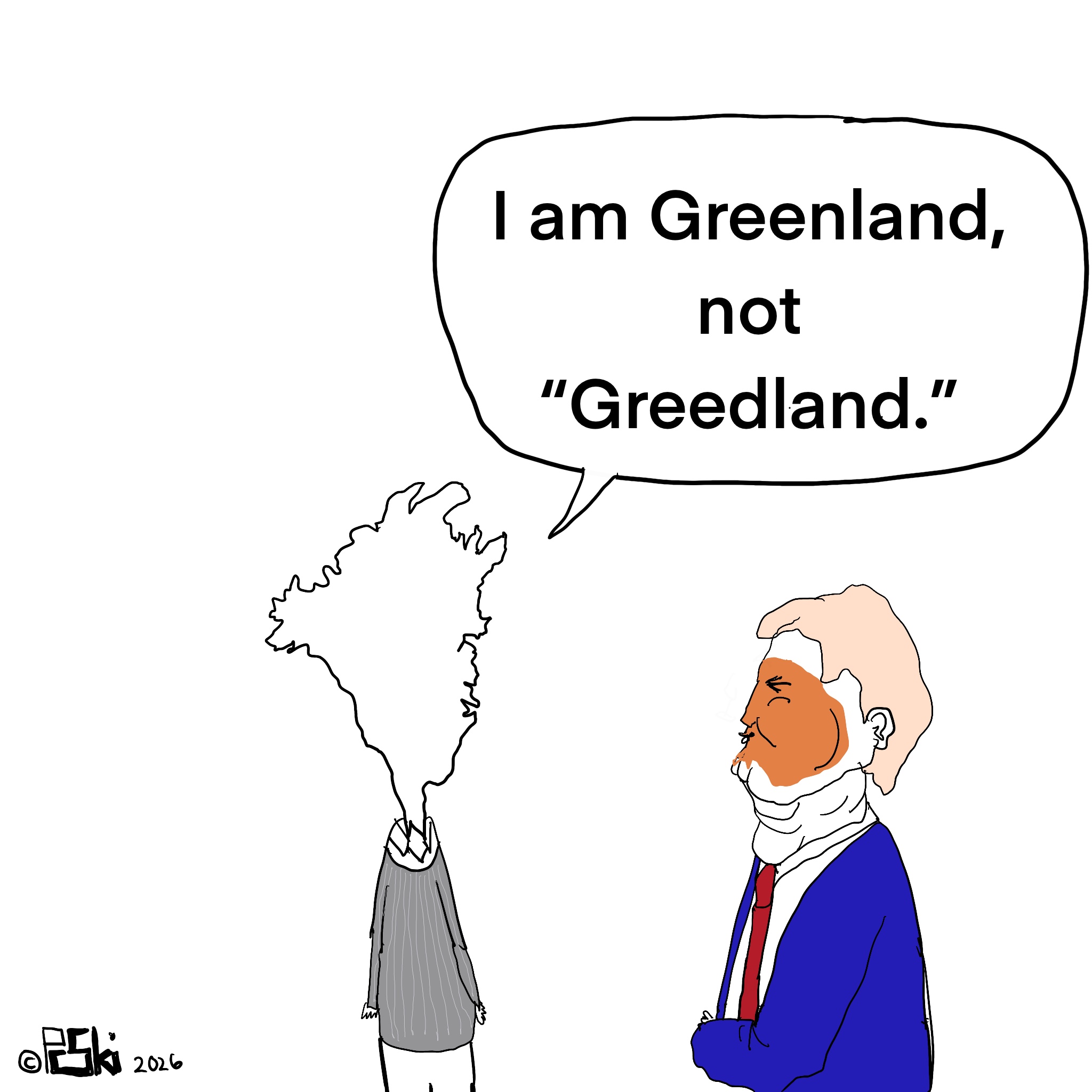

Question: Whether a survey which states only 4 percent approve of the president's position will change Trump's plan to take over Greenland?

* Educated Guess * Possible * Probable * Beyond a Reasonable Doubt * Doubtful * Vapor Dream

OUR STORE IS STILL UNDER RE-CONSTRUCTION. THE WAIT IS ALMOST OVER. APOLOGIES.

LADIES' JAMS MULTIPLE STYLES-COLORS

PRICES SUBJECT TO CHANGE. PLEASE REVIEW E-STORE SITE FOR CURRENT SALES


PRICES SUBJECT TO CHANGE; PLEASE CHECK STORE THANK YOU FOR YOUR SUPPORT!

NEW REAL NEWS KOMIX!


cyberbarf

Distribution ©2001-2026 SKI/pindermedia.com, inc.

All Ski graphics, designs, cartoons and images copyrighted.

All Rights Reserved Worldwide.